- #Extract all links from page install

- #Extract all links from page code

- #Extract all links from page series

The response is parsed with BeautifulSoup…

    Soup = BeautifulSoup(req.content, 'html.parser')

Now that we have extracted all the links from the website, we need to filter the links for the following issues:

- Check link contains ‘href’ attribute: In order to extract hyperlinks, the a tag needs a valid ‘href’, as this attribute is used to store the URL of the linked page.
- Remove ‘#’ from link: Often enough, the # is used to redirect the user to a certain part of the page (e.g. …). In our case, we remove this and only select the main link.
- Check that the link is internal: To ensure that we don’t crawl the entire web, we need to make sure that we only collect links that are local to our website of interest.
- Ensure that link is not the same as the current: To keep things simple, we exclude links which point to themselves.

Using these rules leaves us with the following code…

    # Find all 'a' tags

Using these links we can create an edge between the current link and the new links.

Now that we’ve got a set of new links, we need to introduce a queue-like system to process links one by one recursively until we’ve processed all the hyperlinks on the site. This can be done with a simple for loop…

    for link in links:
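
The four filtering rules and the link-by-link processing can be sketched roughly as follows. This is only an illustration under a few assumptions: the names `clean_link`, `crawl`, `get_hrefs` and the `SITE` dictionary are hypothetical, the example uses an explicit queue (`collections.deque`) rather than the recursive for loop mentioned in the post, and a small in-memory "site" stands in for real HTTP requests so the logic can be run offline.

```python
from collections import deque
from urllib.parse import urldefrag, urljoin, urlparse


def clean_link(current, href, root_netloc):
    """Apply the four filtering rules; return a cleaned URL or None."""
    if not href:  # rule 1: the a tag had no usable href
        return None
    absolute = urljoin(current, href)  # resolve relative links
    absolute, _fragment = urldefrag(absolute)  # rule 2: drop the '#...' part
    if urlparse(absolute).netloc != root_netloc:  # rule 3: internal links only
        return None
    if absolute == current:  # rule 4: skip links that point to themselves
        return None
    return absolute


def crawl(root, get_hrefs):
    """Process pages one by one and collect (current, link) edges."""
    root_netloc = urlparse(root).netloc
    queue = deque([root])
    seen = {root}
    edges = []
    while queue:
        current = queue.popleft()
        for href in get_hrefs(current):
            link = clean_link(current, href, root_netloc)
            if link is None:
                continue
            edges.append((current, link))  # edge: current page -> linked page
            if link not in seen:  # only queue pages we have not visited yet
                seen.add(link)
                queue.append(link)
    return edges


# Hypothetical stand-in for a website: page URL -> raw href values found on it.
SITE = {
    "https://example.com/": ["/about", "/blog#latest", "https://other.org/", None],
    "https://example.com/about": ["/", "/about#team"],
    "https://example.com/blog": ["/about"],
}

edges = crawl("https://example.com/", lambda url: SITE.get(url, []))
for source, target in edges:
    print(source, "->", target)
```

The resulting list of pairs can be loaded into a directed graph such as the `G` used in this post with `G.add_edges_from(edges)`. In a real crawl, `get_hrefs` would fetch each page and return the `href` values of its a tags.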

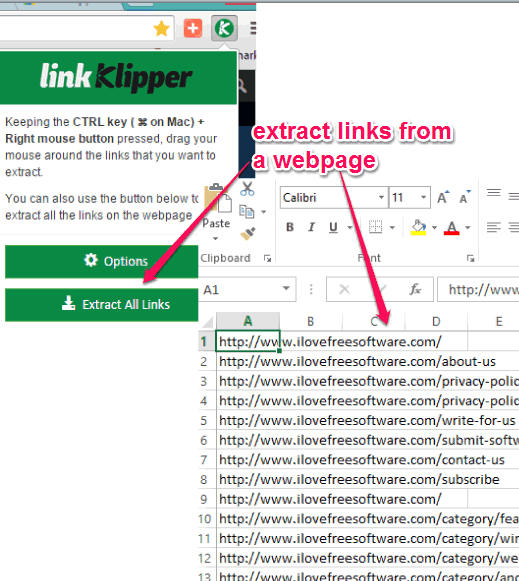

Now that these are installed, we can begin to put some code together. To start, we need to import our packages…

    import requests

We will also need to initialise an empty directed graph. That’s quite easy to do…

    G = nx.DiGraph()

Now that the packages have been loaded in, we can start collecting links from our website. To do this we need to collect all a tags embedded in the website from the initial/starting point domain or “root domain”. This is achieved using BeautifulSoup with the following code to extract all a tags found on the website…

    domain = ''
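
As a rough sketch of this setup (imports, an empty directed graph, and collecting the a tags of a page), something like the following could work. The helper names `fetch_html` and `extract_hrefs`, the `timeout` value and the `sample` HTML string are assumptions made for illustration; they are not taken from the original script.

```python
import networkx as nx
import requests
from bs4 import BeautifulSoup


def fetch_html(url, timeout=10):
    """Download a page and return its HTML (hypothetical helper)."""
    response = requests.get(url, timeout=timeout)
    response.raise_for_status()  # fail loudly on 4xx/5xx responses
    return response.text


def extract_hrefs(html):
    """Return the href value of every a tag that has one."""
    soup = BeautifulSoup(html, "html.parser")
    return [a["href"] for a in soup.find_all("a", href=True)]


# Empty directed graph that will later hold page -> page edges.
G = nx.DiGraph()

# A small HTML string stands in for a downloaded page, so no request is made.
sample = '<p><a href="/about">About</a> <a name="top">no href</a> <a href="#top">Top</a></p>'
hrefs = extract_hrefs(sample)
print(hrefs)
```

For a live page, `extract_hrefs(fetch_html(domain))` would do the same with the root domain set to the site of interest.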

$ pip install networkx pandas requests beautifulsoup4

The same can be said for blog posts that link to other relevant blog posts. This is particularly important for SEO (search engine optimisation), as it allows you to construct a website in such a way that information is easily accessible.

Now that we know why hyperlink networks are important, we can begin to extract the data we need using a simple Python script. In outline, the script will:

- Find all HTML a tags and select those that are within the same website.
- Create edge between current link and next.

To get things going, we need to install a few packages that will help us make the HTTP requests and parse the HTML document. If you haven’t done so already, you need to install the following packages:

- networkx: For modelling the networks (if you don’t know this by now 😉).

If you’ve been on this blog before, you’ll know that hyperlinks and web pages make great networks. The general idea is that the websites are nodes and the hyperlinks connecting them form the edges. Here is what a simple hyperlink network may look like.

To make things easier, we will only crawl pages on the same website. That means only considering a link if it has the same domain. Otherwise, we might as well just crawl the entire web 😉

Why are Hyperlink Networks important?

Hyperlinks determine how a user navigates around a website. They are used to allow the user to access certain information when clicked. The positioning of hyperlinks is important as they determine how well a user can find the material they need. Therefore, highly-connected hyperlink networks suggest that information can be accessed with ease using very few clicks. For example, the use of tags (simple keywords which describe a post) allows users to access relevant blog posts under a specific topic.
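
To make the "very few clicks" idea concrete, here is a minimal sketch using networkx. The page names are invented for illustration; the point is only that, once pages are nodes and hyperlinks are directed edges, the shortest path length from the home page to another page is the minimum number of clicks needed to reach it.

```python
import networkx as nx

# Toy hyperlink network: nodes are pages, directed edges are hyperlinks.
# All page names here are made up for the example.
G = nx.DiGraph()
G.add_edges_from([
    ("home", "blog"),
    ("home", "tags/python"),
    ("blog", "post-1"),
    ("tags/python", "post-2"),
    ("post-1", "post-2"),
])

# Minimum number of clicks from the home page to every reachable page.
clicks = nx.shortest_path_length(G, source="home")
for page, n in sorted(clicks.items(), key=lambda item: item[1]):
    print(f"{page}: {n} click(s)")
```

In this toy network the tag page gives a two-click route from the home page to `post-2`, where going through the blog would take three.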

They allow us to navigate through web pages with a series of simple clicks.

If you use the internet often enough (which, if you’re reading this blog post, I imagine you do), you will soon realise that hyperlinks are everywhere you go. They are a fundamental component of the World Wide Web.
